Alphabet Inc. is cautioning employees about how they use chatbots, including its own Bard, even as it markets the program around the world.

Google has instructed staff not to enter sensitive information into AI chatbots, citing a long-standing policy on data security.

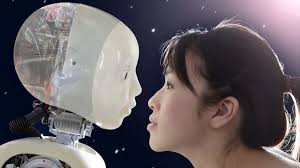

Chatbots such as Bard and ChatGPT are human-sounding programs that hold conversations with users and answer a wide range of questions. Human reviewers may read those conversations, and researchers have found that such AI can reproduce data it absorbed during training, creating a leak risk.

According to several sources, Alphabet also warned its engineers against using chatbot-generated code directly.

Asked for comment, the company said that Bard can make undesired code suggestions but nevertheless helps programmers. Google also said it aims to be transparent about the limitations of its technology.

The concerns show how Google wants to avoid business harm from the software it launched to compete with ChatGPT. At stake in Google’s race against ChatGPT’s backers, OpenAI and Microsoft Corp., are billions of dollars in investment and still-unknown advertising and cloud revenue from future AI programs.

Google’s warning also reflects what is becoming a security norm for businesses: cautioning staff against using publicly available chat programs.

A growing number of organizations around the world have placed restrictions on AI chatbots, including Samsung, Amazon.com, and Deutsche Bank. Apple, which did not respond to requests for comment, has reportedly done the same.

A survey of roughly 12,000 respondents, including some from leading U.S.-based companies, conducted by the networking site Fishbowl found that 43% of professionals were using ChatGPT or other AI tools as of January, often without telling their managers.

By February, Google had told staff testing Bard ahead of its launch not to give it internal information, according to Insider. Google is now rolling out Bard to more than 180 countries and in 40 languages, pitching it as a catalyst for innovation, and its warnings extend to the chatbot's code suggestions.

Google told reporters that it has had detailed conversations with Ireland’s Data Protection Commission and is addressing regulators’ questions. The statement followed a Politico report on Tuesday that the company was postponing Bard’s EU launch this week pending more information about the chatbot’s impact on privacy.

Concerns around sensitive information

Such technology can draft emails, documents, and even software itself, promising to speed up tasks significantly. But the content it produces can include falsehoods, private information, or even copyrighted passages from the “Harry Potter” books.

A Google privacy notice updated on June 1 also tells users not to include sensitive or personal information in their Bard conversations.

Some companies have developed software to address these concerns. For instance, Cloudflare, which defends websites against cyberattacks and offers other cloud services, is marketing a feature that lets businesses tag data and restrict what can be shared externally.

Google and Microsoft are also offering business customers conversational tools that cost more but do not feed data into public AI models. By default, Bard and ChatGPT save users’ conversation history, which users can choose to delete.

According to Yusuf Mehdi, Microsoft’s consumer chief marketing officer, it “makes sense” that companies would not want their employees using public chatbots for work.

“Companies are taking a suitably conservative standpoint,” Mehdi said, comparing Microsoft’s free Bing chatbot with its enterprise products. “Over there, our rules are much stricter.”

Microsoft declined to say whether it bars employees from entering private data into AI programs, including its own. However, another executive there told reporters that he personally restricted his use.

Cloudflare CEO Matthew Prince compared inputting private information into chatbots to “letting a bunch of Ph.D. students loose in all of your private records.”