On Tuesday, Alphabet Inc.’s Google released new details about the supercomputers it uses to train its artificial intelligence models, saying the systems are faster and more power-efficient than comparable systems from Nvidia Corp.

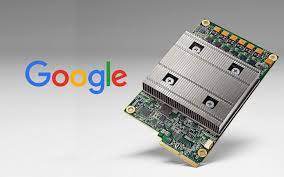

Google designed its own custom chip, called the Tensor Processing Unit, or TPU. The company uses those chips for more than 90% of its work on artificial intelligence training, the process of feeding data through models to make them useful at tasks such as generating human-like text or creating images.

The TPU is now in its fourth generation. In a scientific paper published on Tuesday, Google described how it connected more than 4,000 of the chips into a supercomputer using optical switches it developed in-house.

Improving these chip-to-chip connections has become a key point of competition among companies that build AI supercomputers, because the so-called large language models that power products such as Google’s Bard and OpenAI’s ChatGPT have grown so large that they no longer fit on a single chip.

Instead, the models must be split across thousands of chips, which then have to work together for weeks or more to train the model. Google’s PaLM model, the company’s largest publicly disclosed language model to date, was trained over 50 days by splitting it across two of the 4,000-chip supercomputers.

Google said its supercomputers make it easy to reconfigure the connections between chips on the fly, which helps the company avoid problems and tune for better performance.

In a blog post about the system, Google Fellow Norm Jouppi and Google Distinguished Engineer David Patterson wrote that “circuit switching makes it straightforward to route around faulty components.” They added that this flexibility even allows Google to change the topology of the supercomputer’s interconnect to accelerate a machine learning model’s performance.

Although Google is only now disclosing details about it, the supercomputer has been running inside the company since 2020 at a data center in Mayes County, Oklahoma. Google said the startup Midjourney used the system to train its model, which generates new images after being fed a short text prompt.

In the paper, Google said that for comparably sized systems, its chips are up to 1.7 times faster and 1.9 times more power-efficient than a system based on Nvidia’s A100 chip, which was on the market at the same time as the fourth-generation TPU.

A representative for Nvidia declined to comment.

Google said it did not compare its fourth-generation TPU with Nvidia’s current flagship H100 chip because the H100 came to market after Google’s chip and is built with newer manufacturing technology.

Google hinted that it might be developing a new TPU to compete with the Nvidia H100 but gave no details, with Jouppi telling reporters only that Google has “a solid pipeline of future processors.”