Leading tech firms agreed on Friday to voluntarily take “reasonable precautions” to prevent artificial intelligence tools from being used to rig elections around the world.

At the Munich Security Conference, executives from Adobe, Amazon, Google, IBM, Meta, Microsoft, OpenAI, and TikTok announced a new framework for handling AI-generated deepfakes that are intended to deceive voters. Elon Musk’s X is among the twelve other businesses that have signed on to the agreement.

Nick Clegg, president of global affairs at Meta, the parent company of Facebook and Instagram, stated in an interview conducted before the summit that “everyone recognizes that no one tech company, no one government, and no one civil society organization can deal with the advent of this technology and its possibly nefarious use on their own.”

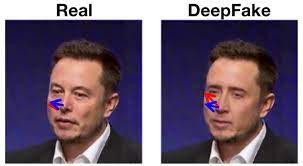

The agreement is primarily symbolic but targets increasingly realistic AI-generated images, audio, and video that “deceptively fake or alter the appearance, voice, or actions of political candidates, election officials, and other key stakeholders in a democratic election, or that provide false information to voters about when, where, and how they can lawfully vote.”

The businesses have not promised to ban or remove deepfakes. Rather, the agreement describes the steps they will take to detect and label misleading AI content when it is produced or shared on their platforms. It states that when such content begins to circulate, the businesses will exchange best practices and offer “swift and proportionate responses.”

The agreement’s ambiguity and lack of legally binding requirements probably helped win over a wide range of businesses, but disappointed advocates had hoped for more concrete guarantees.

“The language isn’t quite as strong as one might have expected,” said Rachel Orey, senior associate director of the Elections Project at the Bipartisan Policy Center. “I believe it’s important to give credit where credit is due and recognize that the corporations do have a stake in ensuring that their products aren’t used to sabotage honest and transparent elections. However, it is entirely voluntary, and we will be monitoring their actions.”

Every business “quite rightly has its own set of content policies,” according to Clegg. “This is not an attempt to put everyone in a straitjacket,” he said. In any case, he added, nobody in the industry believes that you can handle a whole new technology paradigm by ignoring issues, playing whack-a-mole, and hunting for anything that you believe could mislead someone.

Some American and European political figures also attended the announcement on Friday. Although such an agreement can’t be comprehensive, according to European Commission Vice President Vera Jourova, “it contains very impactful and positive elements.” She also cautioned that misinformation powered by AI would lead to “the end of democracy, not only in the EU member states” and urged fellow politicians to take responsibility for not using AI tools deceptively.

The accord, reached at the annual security gathering in the German city, comes as more than 50 countries are scheduled to hold national elections in 2024. Bangladesh, Taiwan, Pakistan, and most recently Indonesia have already done so.

In an early example of attempted election meddling, AI-generated robocalls mimicking the voice of U.S. President Joe Biden tried to dissuade voters from casting ballots in last month’s primary election in New Hampshire.

A few days before the November elections in Slovakia, audio recordings created by AI mimicked a candidate talking about plans to rig the results and boost beer prices. As they circulated on social media, fact-checkers hurried to disprove them.

Politicians have also dabbled in the technology, from integrating AI-generated imagery into advertisements to interacting with voters through chatbots.

The agreement urges platforms to “pay attention to context and in particular to safeguarding artistic, satirical, political, and educational expression.”

The companies aim to educate the public about how to avoid falling for AI fakes and will prioritize being transparent with users about their rules, according to the statement.

Most of the companies have previously said they are putting safeguards on their own generative AI tools, which can manipulate images and sound, while also working to identify and label AI-generated content so that social media users can tell whether what they’re viewing is real. However, many of those proposed fixes haven’t been rolled out yet, and the businesses are under pressure to do more.

This pressure is especially strong in the United States, where Congress has not yet passed legislation governing AI in politics, leaving companies to largely govern themselves.

The Federal Communications Commission recently confirmed that AI-generated audio clips used in robocalls are illegal; however, that does not cover audio deepfakes shared on social media or in campaign advertisements.

Several social media platforms already have policies in place to deter misleading posts about election processes, whether or not they are produced by AI. Meta says it removes inaccurate information regarding “the dates, locations, times, and methods for voting, voter registration, or census participation,” as well as other false posts intended to interfere with someone’s civic participation.

The agreement appears to be a “positive step,” according to Jeff Allen, co-founder of the Integrity Institute and a former Facebook data scientist. However, he would still like to see social media companies take additional steps to combat misinformation, like developing content recommendation systems that don’t put engagement first.

The agreement is “not enough,” according to Lisa Gilbert, executive vice president of Public Citizen, who also stated that AI firms should “hold back technology” like hyper-realistic text-to-video generators “until there are substantial and adequate safeguards in place to help us avert many potential problems.”

Notable signatories of the agreement on Friday include the security companies McAfee and TrendMicro, chip designer Arm Holdings, chatbot developers Anthropic and Inflection AI, voice-clone startup ElevenLabs, and Stability AI, which created the image-generator Stable Diffusion.

Midjourney, another well-known AI image generator, is conspicuously missing. The San Francisco-based startup did not immediately respond to a request for comment on Friday.

One of the surprises of Friday’s agreement was the inclusion of X, which had not been mentioned in an earlier announcement about the pending accord. Following his takeover of the former Twitter, Musk sharply cut its content-moderation teams and has described himself as a “free speech absolutist.”

“Every citizen and company has a responsibility to safeguard free and fair elections,” said Linda Yaccarino, CEO of X, in a statement released on Friday.

She added, “X is committed to doing its share, working with peers to counter AI threats while simultaneously preserving free speech and enhancing transparency.”